Autonomous cars gather up tons of data about the world around them, but even the best computer vision systems can’t see through brick and mortar. By carefully monitoring the reflected light of a laser bouncing off a nearby surface, though, they might be able to see around corners. That’s the idea behind recently published research from Stanford engineers.

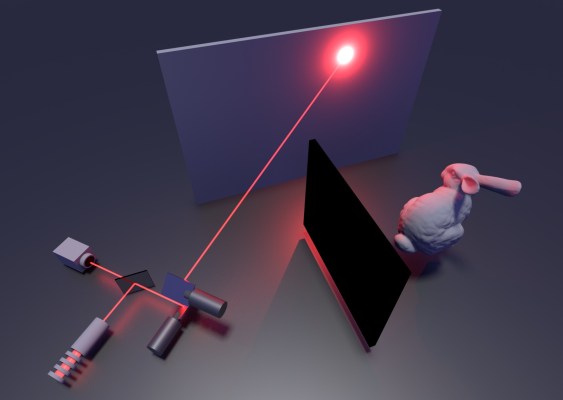

The basic idea is one we’ve seen before: It’s possible to discern the shape of an object on the far side of an obstacle by shining a laser or structured light on a surface nearby and analyzing how the light scatters. Patterns emerge when some pulses return faster than others, or are otherwise changed by having interacted with the unseen object.
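To make the timing idea concrete, here is a minimal sketch of the underlying arithmetic, not the researchers' reconstruction method. It assumes a simplified setup in which the laser and detector look at the same spot on the visible surface, so a pulse that detours to the hidden object travels out and back along the same path. The function names and that same-spot assumption are illustrative, not taken from the paper.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def extra_path_m(direct_delay_s: float, indirect_delay_s: float) -> float:
    """Extra distance travelled by a pulse that arrived later than the direct one.

    direct_delay_s:   arrival time of light that only touched the visible surface.
    indirect_delay_s: arrival time of light that also bounced off the hidden object.
    """
    if indirect_delay_s < direct_delay_s:
        raise ValueError("indirect light cannot arrive before the direct return")
    return SPEED_OF_LIGHT_M_PER_S * (indirect_delay_s - direct_delay_s)


def hidden_distance_m(direct_delay_s: float, indirect_delay_s: float) -> float:
    """Distance from the illuminated spot to the hidden object.

    Assumption (hypothetical setup): the detour is an out-and-back trip along
    the same path, so the one-way distance is half of the extra path length.
    """
    return extra_path_m(direct_delay_s, indirect_delay_s) / 2.0


if __name__ == "__main__":
    # A pulse that comes back 10 nanoseconds after the direct return.
    distance = hidden_distance_m(5e-9, 15e-9)
    print(f"hidden object is roughly {distance:.2f} m from the wall spot")
```

Under these assumptions, a pulse arriving 10 nanoseconds late puts the hidden object roughly 1.5 meters from the illuminated spot. A single delay only constrains distance, not direction, which is why the laser has to be scanned across many spots before a shape can be recovered.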

It isn’t easy to do. Reflected laser light can easily be lost in the noise of broad daylight, for instance. And if you want to reconstruct a model of the object precise enough to tell whether it’s a person or a stop sign, you need a lot of data and the processing power to crunch that data.

It’s this second problem that the Stanford researchers, from the school’s Computational Imaging Group, address in a new paper published in Nature.

“Despite recent advances, [non-line-of-sight] imaging has remained impractical owing to the prohibitive memory and processing requirements of existing reconstruction algorithms, and the extremely weak signal of multiply scattered light,” the abstract reads in part.

“A substantial challenge in non-line-of-sight imaging is figuring out an efficient way to recover the 3-D structure of the hidden object from the noisy measurements,” said grad student David Lindell, co-author of the paper, in a Stanford news release.

The data collection process still takes a long time as the laser scans across a surface: think a couple of minutes to an hour, though that’s still on the low side for this type of technique. The photons do their thing, bouncing around the other side, and some make it back to somewhere near their point of origin, where they are picked up by a high-sensitivity detector.

The detector sends its data on to a computer, which processes it using the algorithm created by the researchers. Their work allows this part to proceed extremely quickly, reconstructing the scene in relatively high fidelity with just a second or two of processing.

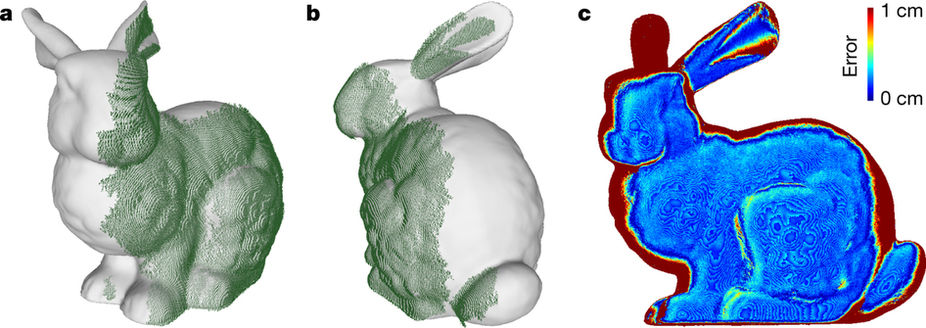

The white model is the actual shape of the unseen item, and the green mesh is what the system detected (from only one side, of course).

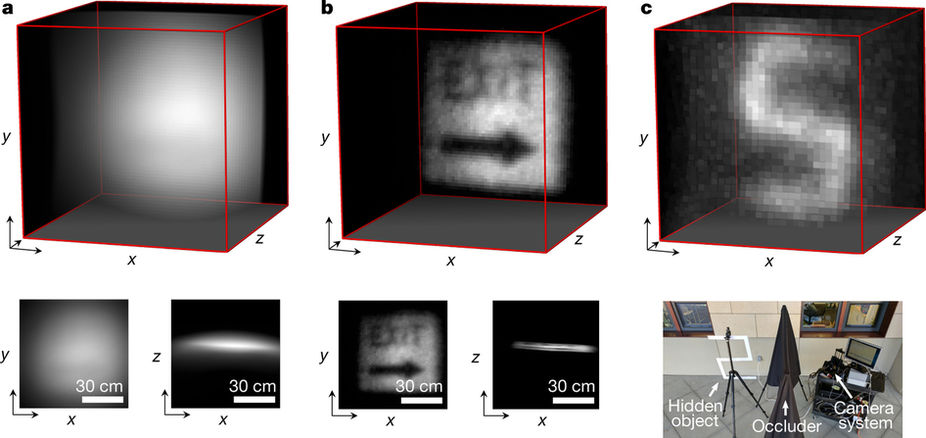

The resulting system is also less susceptible to interference, allowing it to be used in indirect sunlight.

Example of a sign reconstructed from being lasered outside.

Of course, it’s not much use detecting a person on the far side of a wall if it takes an hour to do so. But the laser setups used by the researchers are very different from the high-speed scanning lasers found in lidar systems, and the algorithm they built should be compatible with those faster scanners, which could vastly reduce the data acquisition time.

“We believe the computation algorithm is already ready for LIDAR systems,” said Matthew O’Toole, co-lead author of the paper (with lab leader Gordon Wetzstein). “The key question is if the current hardware of LIDAR systems supports this type of imaging.”

If their theory is correct, then this algorithm could soon enable existing lidar systems to analyze their data in a new way, potentially spotting a moving car or person approaching an intersection before it’s even visible. It’ll be a while still, but at this point it’s just a matter of smart engineering.